Designing Human-Centered AI for Scalable Supervision to Support Invisible Work

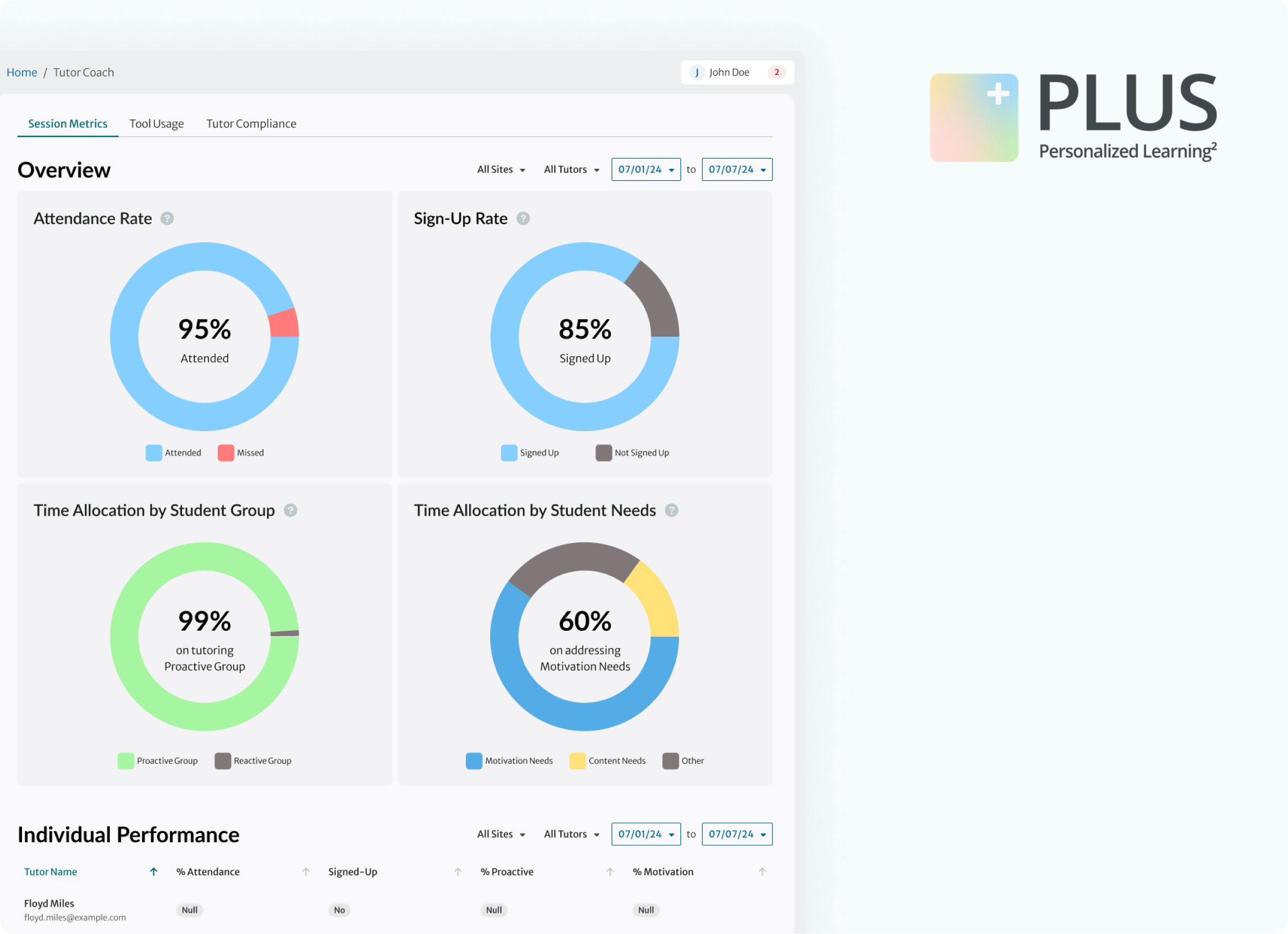

PLUS (Personalized Learning Squared) is a tutoring platform that combines human and AI tutoring to boost learning gains for middle school students from historically underserved communities. The platform supports over 3,000 students and 500 tutors, completing more than 90,000 hours of tutoring each month.

Instead of replacing human judgment, the tool amplifies human work without losing the human heart.

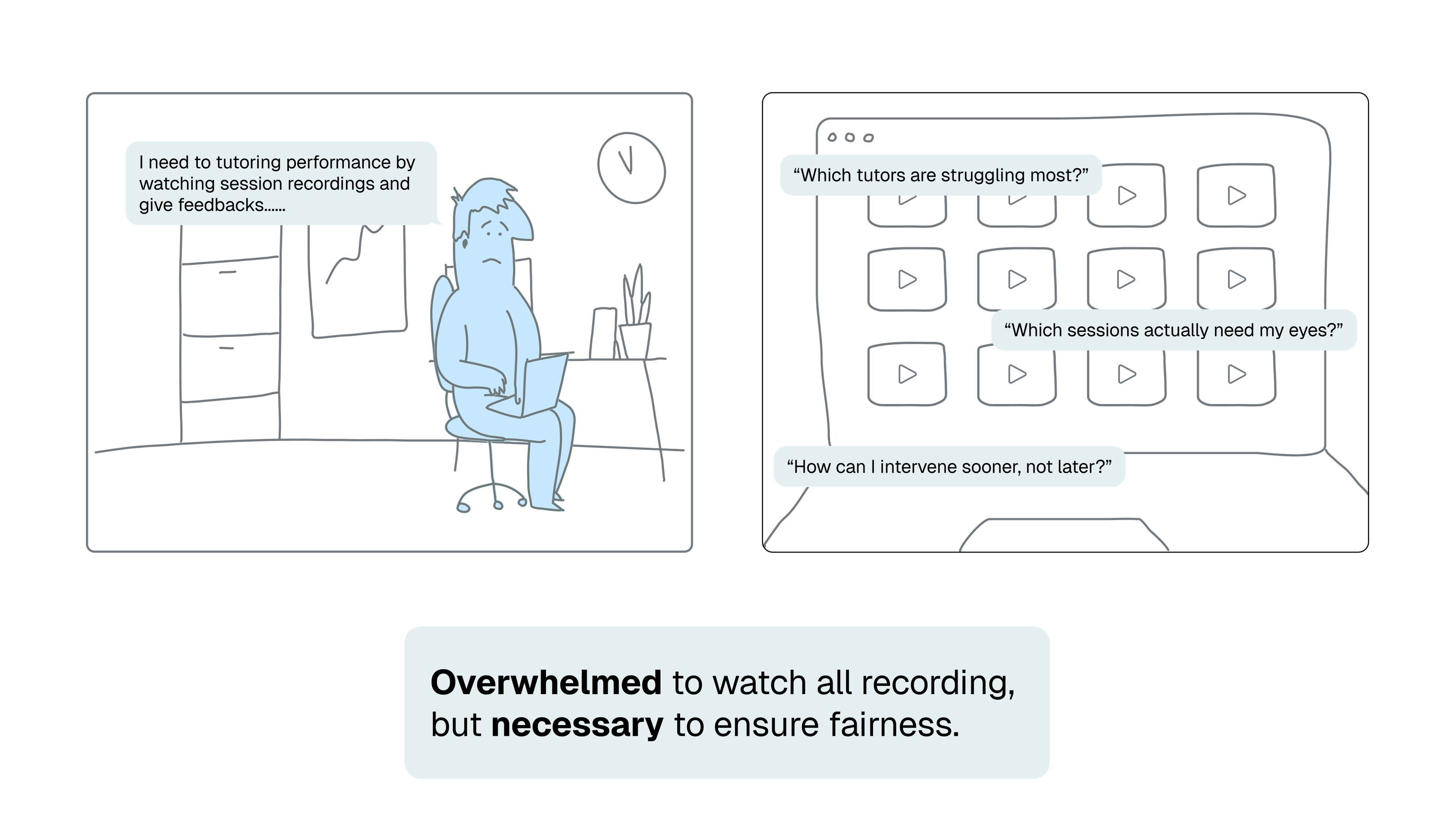

Supervisors watch a lot of Zoom session recordings.

For supervisors, manually reviewing 100+ sessions each week was exhausting and inefficient, leaving no room for deep insights or proactive coaching.

How might we automate the tedious parts of supervision?

Could designing AI ease this burden?

We saw an opportunity to ease this burden by automating low-value tasks.

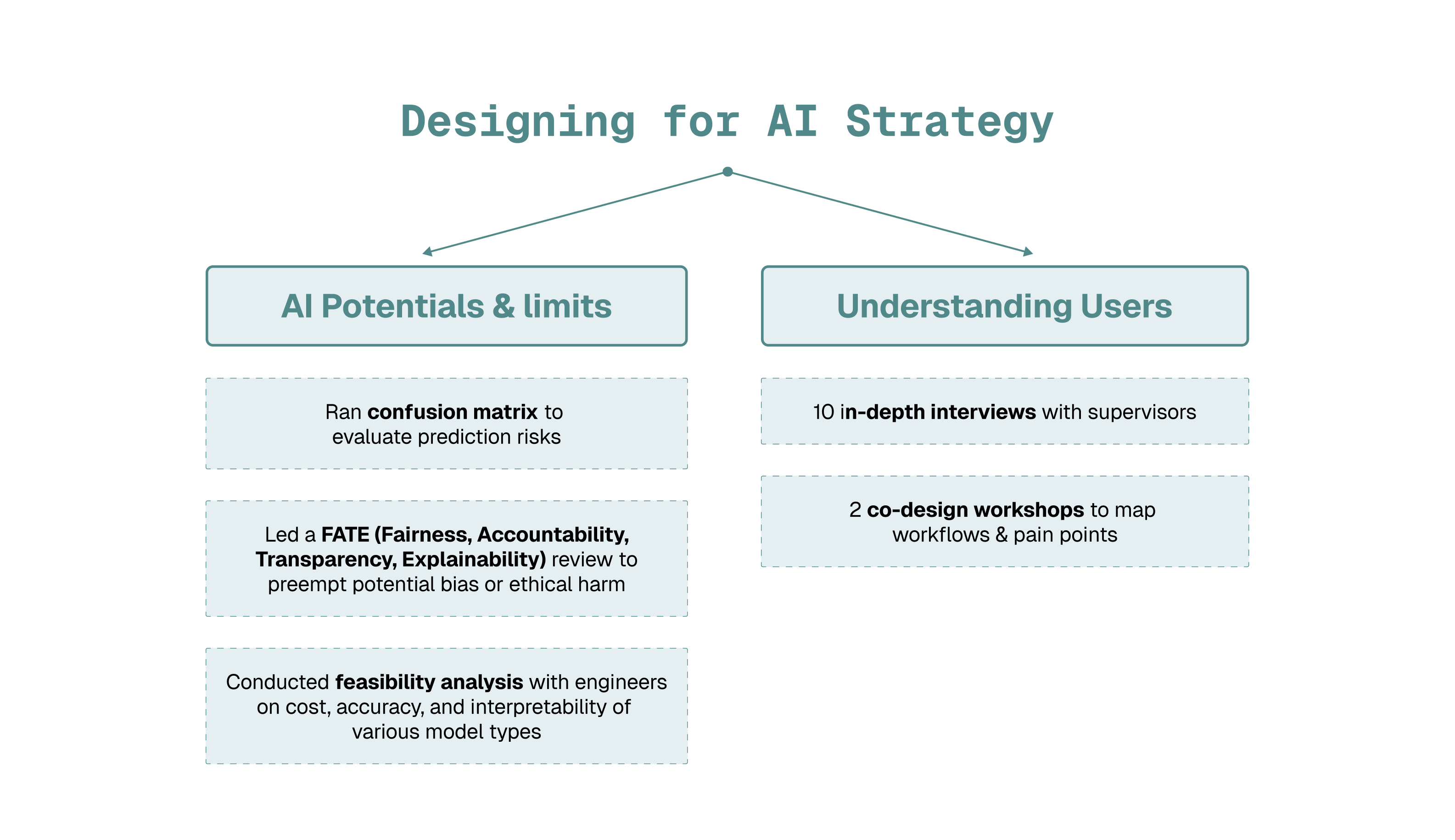

Before jumping into design, I partnered with researchers and engineers to align on how AI could responsibly support this shift—without compromising human oversight.

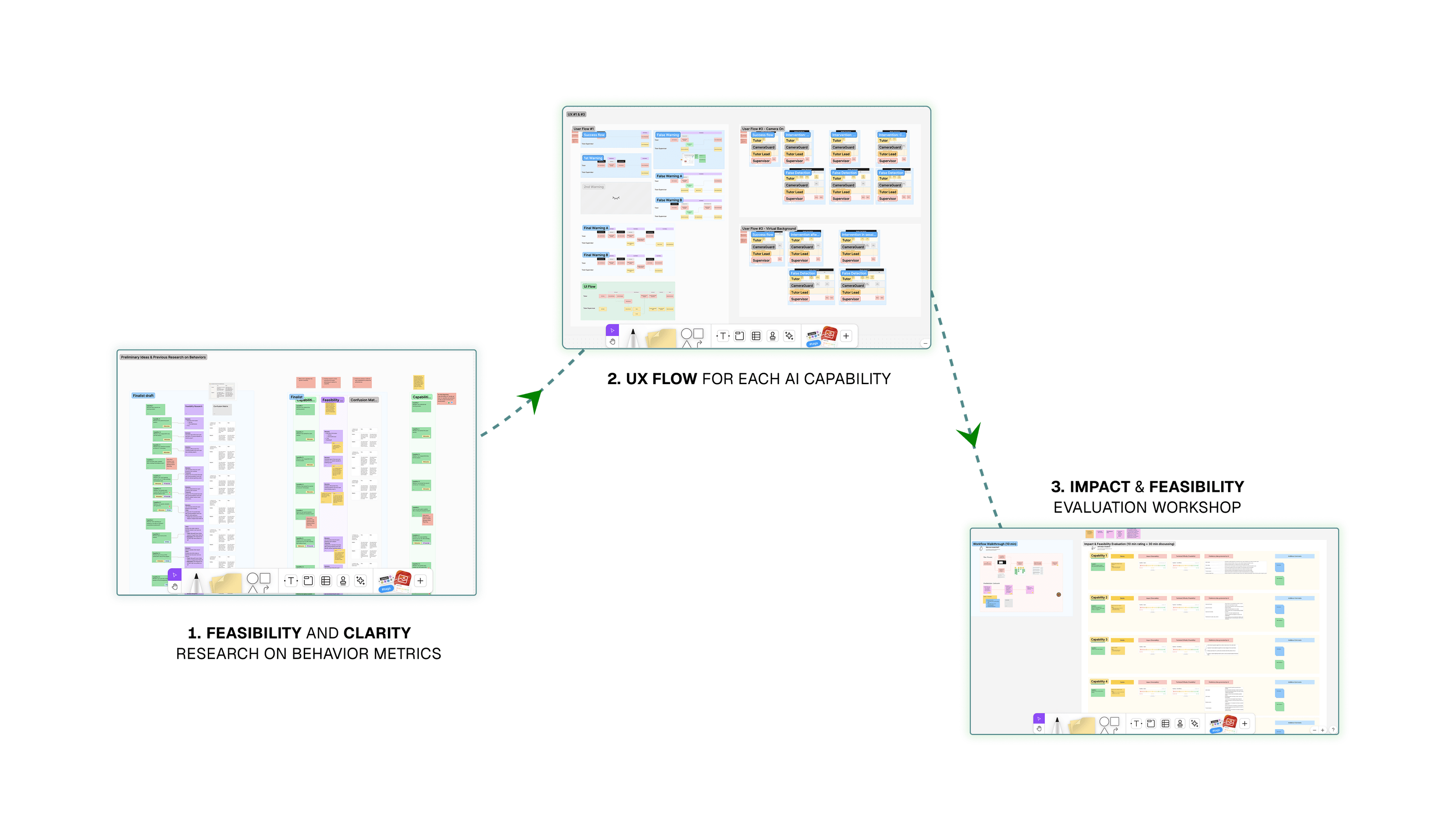

We're crafting the narrative by understanding how AI is used and where its limits lie.

We used confusion matrices, user flows, and a capability study to understand how AI could be used.

We're crafting the narrative by keeping humans in the loop.

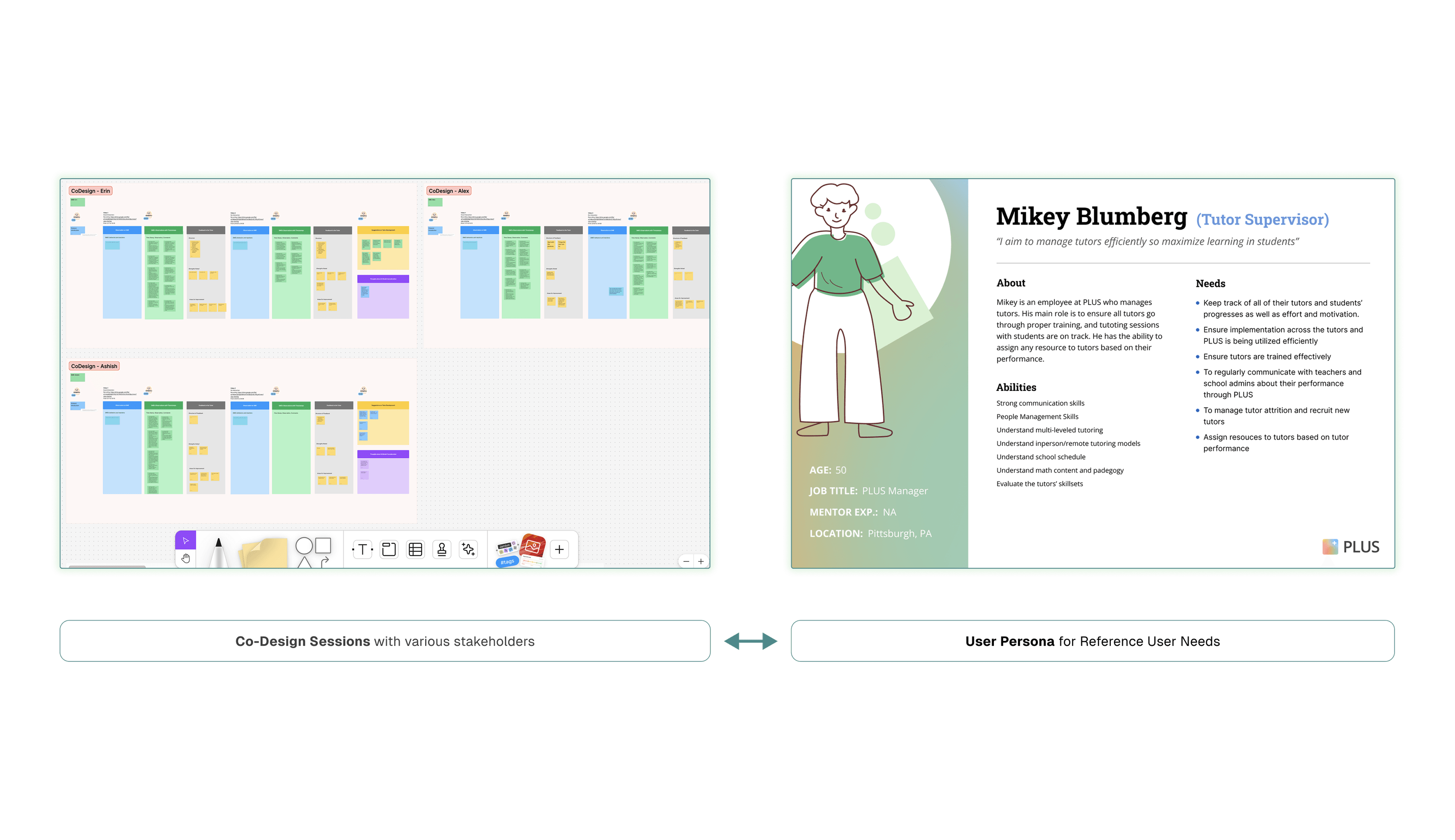

We ran co-design sessions with end users to align on where they expect AI to help.

What principles should we follow to bridge the technical and the human?

After conducting AI evaluations and talking with end users, we focused on the AI design principles those findings informed.

Where AI Could Help, What to Leave to Humans

From user interviews and technical feasibility reviews, we identified clear opportunities for AI to reduce manual workload—without overstepping human judgment.

Supervisors didn’t need AI to evaluate emotional nuance or replace their decisions; they needed help surfacing what matters most.

Solution #1

Can AI understand "engagement"?

Supervisors were spending hours watching Zoom recordings to gauge whether tutors were engaged.

They wanted help detecting signals like “warmth” or “proactiveness,” which led us to initially use AI models to score sentiment, tone, and conversational pace.

Unreliable, Biased & Infeasible

Our early approach ran into several things AI could not do well: the models were unreliable, biased, and technically infeasible to build into the product.

Turning AI into a tracker, not an enforcer

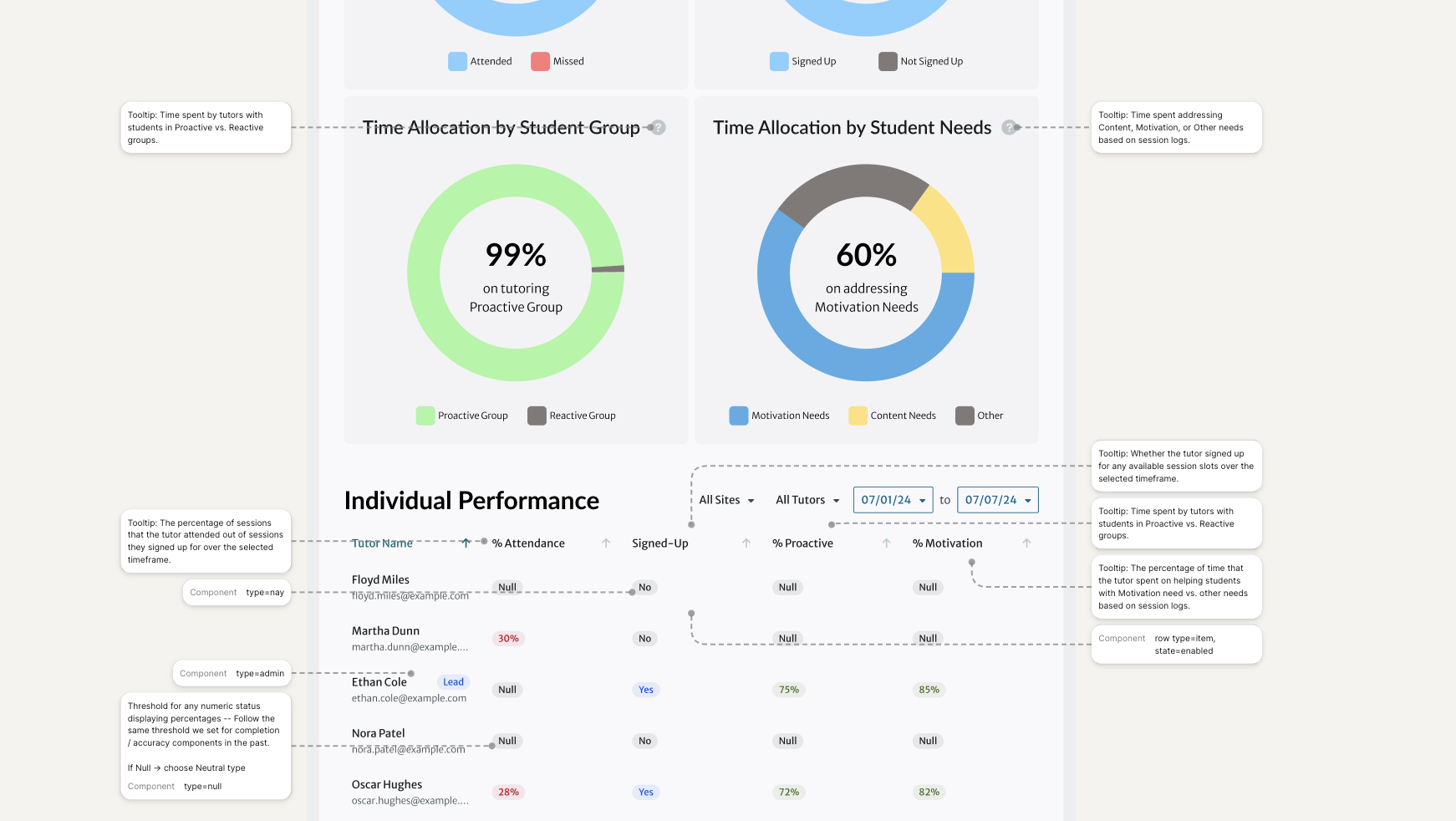

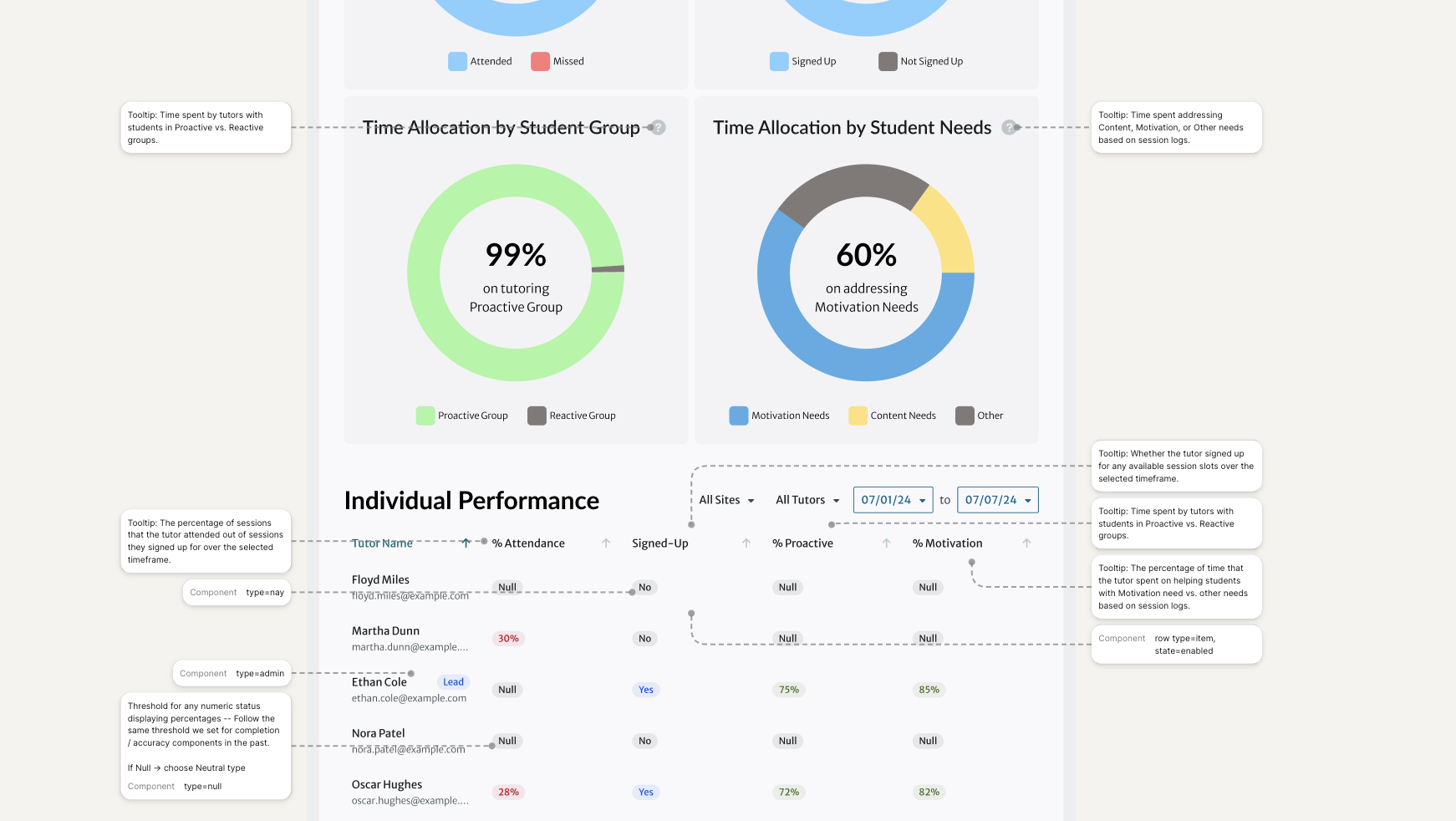

We redesigned the system to surface tutor-level performance patterns while keeping humans in control: AI identifies repeated issues but doesn't act on them.

Supervisors receive trend summaries and alerts in the dashboard, and they can override alerts and add notes so that final decisions stay with them.
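One way to read the tracker-not-enforcer idea in code is sketched below. This is a minimal Python sketch, not the PLUS implementation: the `Flag` class, the `raise_flags` and `review` functions, the threshold of 3, and the rule that every decision carries a note are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Flag:
    """A repeated issue surfaced for a supervisor (hypothetical structure)."""
    tutor_id: str
    issue: str
    occurrences: int
    # The system only ever creates flags in this state; it never decides.
    status: str = "pending_review"
    supervisor_note: Optional[str] = None


def raise_flags(issue_counts: Dict[Tuple[str, str], int], threshold: int = 3) -> List[Flag]:
    """Surface (tutor, issue) pairs seen at least `threshold` times.

    The threshold is an assumed value, not one taken from the case study.
    """
    return [
        Flag(tutor_id, issue, count)
        for (tutor_id, issue), count in sorted(issue_counts.items())
        if count >= threshold
    ]


def review(flag: Flag, decision: str, note: str) -> Flag:
    """Record the supervisor's decision; only a human call changes the status."""
    if decision not in {"confirmed", "overridden"}:
        raise ValueError("decision must be 'confirmed' or 'overridden'")
    if not note.strip():
        raise ValueError("a note explaining the decision is required")
    flag.status = decision
    flag.supervisor_note = note
    return flag


# Hypothetical sample data: tutor t1 missed four sessions, t2 started late once.
counts = {("t1", "no_show"): 4, ("t2", "late_start"): 1}
flags = raise_flags(counts)
reviewed = review(flags[0], "overridden", "Tutor had approved leave.")
```

In this sketch the automated step can only create a pending flag. Anything that affects a tutor goes through `review`, which a supervisor calls with an explanation.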

Solution #2

Tracking Tutors Is Manual

Supervisors were manually documenting performance issues like no-shows by checking Excel sign-up sheets and matching them with Zoom attendance logs.

It was time-consuming and unreliable to track patterns at the tutor level—supervisors could only see if a single session was missed, not how often someone missed sessions overall.
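The missing tutor-level view can be sketched as a join between sign-ups and attendance records. This is an illustrative Python sketch only: the data shapes (pairs of tutor ID and session ID), the function name `no_show_rates`, and the sample records are hypothetical, since the actual Excel and Zoom export formats are not described here.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

Session = Tuple[str, str]  # (tutor_id, session_id), an assumed record shape


def no_show_rates(signups: Iterable[Session], attendance: Set[Session]) -> Dict[str, dict]:
    """Aggregate missed sessions per tutor instead of per session.

    A sign-up with no matching attendance record counts as a no-show.
    """
    scheduled = defaultdict(int)
    missed = defaultdict(int)
    for tutor_id, session_id in signups:
        scheduled[tutor_id] += 1
        if (tutor_id, session_id) not in attendance:
            missed[tutor_id] += 1
    return {
        tutor_id: {
            "scheduled": scheduled[tutor_id],
            "missed": missed[tutor_id],
            "rate": missed[tutor_id] / scheduled[tutor_id],
        }
        for tutor_id in scheduled
    }


# Hypothetical sample data standing in for the sign-up sheet and attendance log.
signups = [("t1", "s1"), ("t1", "s2"), ("t1", "s3"), ("t2", "s1"), ("t2", "s2")]
attendance = {("t1", "s1"), ("t2", "s1"), ("t2", "s2")}
summary = no_show_rates(signups, attendance)
```

With this sample data, `summary["t1"]` shows two missed sessions out of three scheduled, a pattern that checking one session at a time would not reveal.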

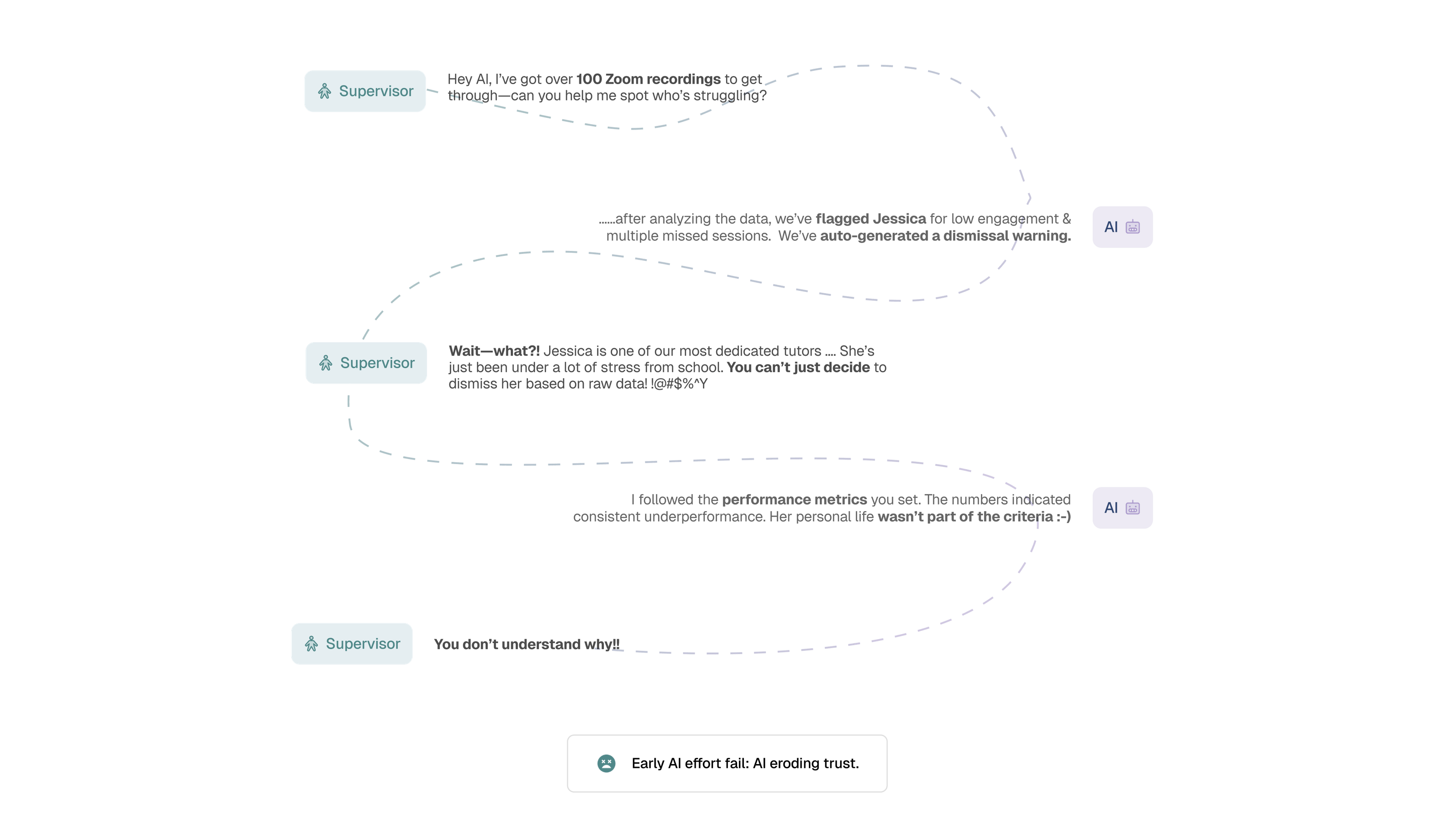

AI makes harsh decisions

Early AI models were too opaque or made decisions on their own, eroding trust. Supervisors wanted assistance, not automation without explanation.

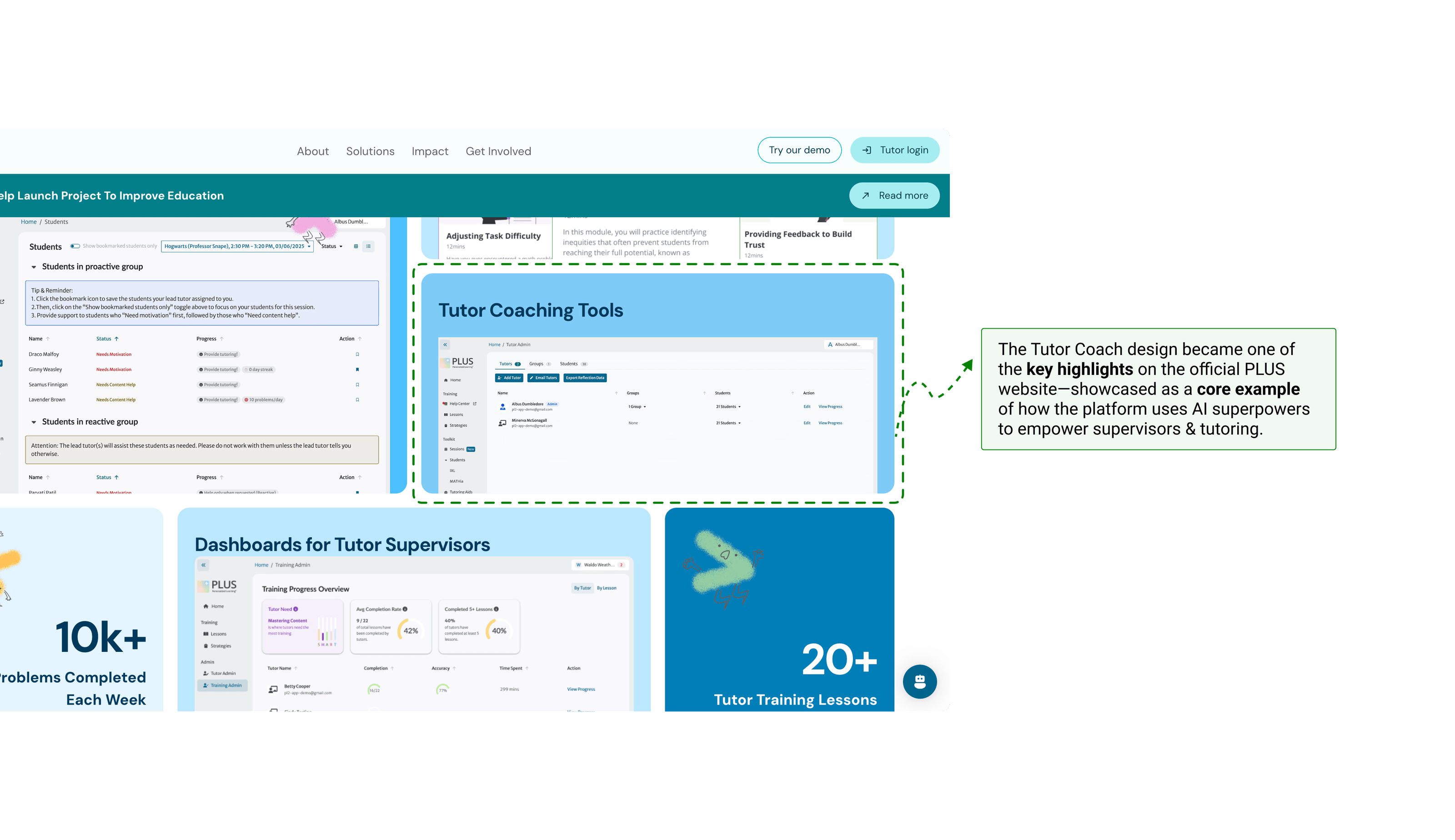

Tutor Coach brought measurable impact across the PLUS platform

Tutors received fairer, more transparent feedback, while supervisors saved time by focusing only on sessions that needed attention.

(1) AI and Humanity?

AI doesn’t need to feel human—it needs to make humans feel confident.

(2) Great AI UX means knowing what not to automate.

The value of AI isn’t just speed—it’s clarity, focus, and trust.